DeepSeek's surprisingly affordable AI model challenges industry giants. The company cites a pre-training cost of just $6 million for its DeepSeek V3 model, but a closer look reveals a far more substantial investment.

Image: ensigame.com

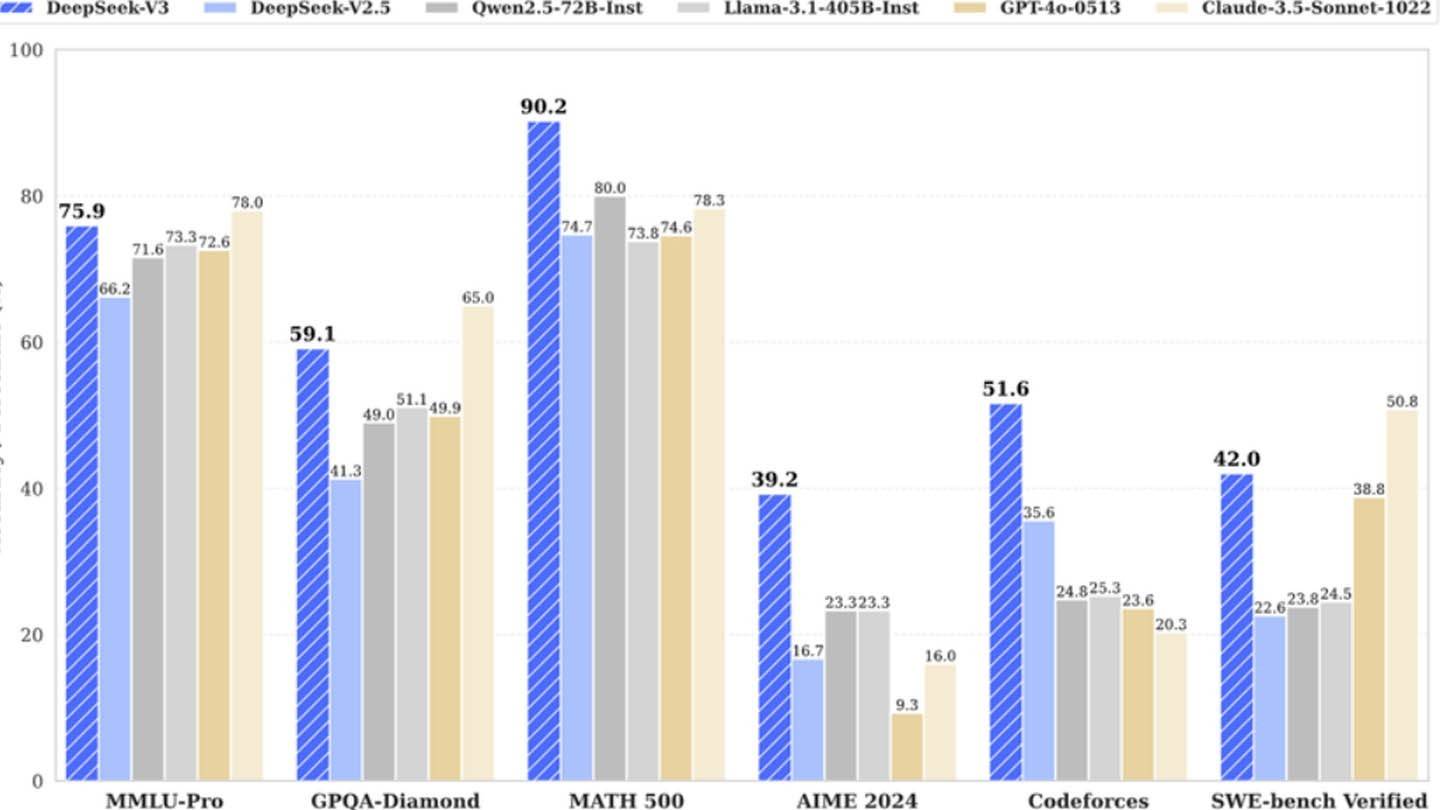

DeepSeek V3 combines several techniques: Multi-token Prediction (MTP), which improves accuracy and efficiency; Mixture of Experts (MoE), which uses 256 expert networks, only eight of which are activated for each token; and Multi-head Latent Attention (MLA), which improves information extraction. Together, these techniques contribute to its competitive performance.
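To make the "256 experts, eight activated per token" idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is an illustration only, not DeepSeek's implementation: the function names (`route_token`, `moe_output`), the toy scalar experts, and the choice of a softmax over the selected scores are all assumptions made for this example.

```python
import math
import random

NUM_EXPERTS = 256  # number of expert networks, per the article
TOP_K = 8          # experts activated per token, per the article


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts.

    Weights are a softmax over the selected scores only (an assumed,
    generic gating choice), so they sum to 1.
    """
    top = sorted(range(len(router_scores)),
                 key=lambda i: router_scores[i], reverse=True)[:top_k]
    weights = softmax([router_scores[i] for i in top])
    return list(zip(top, weights))


def moe_output(token_value, router_scores, experts, top_k=TOP_K):
    """Weighted sum of the selected experts' outputs.

    Experts that were not selected are never called, which is where
    the compute saving comes from.
    """
    return sum(w * experts[i](token_value)
               for i, w in route_token(router_scores, top_k))


# Hypothetical toy experts: expert k multiplies its scalar input by (k + 1).
experts = [lambda x, k=k: x * (k + 1) for k in range(NUM_EXPERTS)]

rng = random.Random(0)
scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
selected = route_token(scores)
print(f"{len(selected)} of {NUM_EXPERTS} experts run for this token")
```

In this sketch each token triggers only 8 of the 256 expert calls, so per-token compute scales with the number of active experts rather than with the total number of experts.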

However, SemiAnalysis reported that DeepSeek uses approximately 50,000 Nvidia Hopper GPUs, an investment of roughly $1.6 billion in servers plus $944 million in operating costs. These figures put the $6 million claim in context: it reflects only the GPU expense of pre-training. The true cost also encompasses research, refinement, data processing, and infrastructure.
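The size of that gap is easy to check with the article's own rounded figures. The short calculation below is a rough illustration, not an accounting: it assumes the server and operating figures can simply be added, and the variable names are made up for this example.

```python
# Figures as quoted in the article (approximate, in US dollars).
PRETRAINING_CLAIM_USD = 6_000_000    # headline pre-training GPU cost
SERVER_CAPEX_USD = 1_600_000_000     # servers, per SemiAnalysis
OPERATING_COST_USD = 944_000_000     # operating costs, per SemiAnalysis

# Assumption: treat the two SemiAnalysis figures as additive.
estimated_total = SERVER_CAPEX_USD + OPERATING_COST_USD
ratio = estimated_total / PRETRAINING_CLAIM_USD

print(f"Estimated total: ${estimated_total / 1e9:.3f} billion")
print(f"Roughly {ratio:.0f}x the headline pre-training figure")
```

Under these assumptions, the combined estimate is about $2.5 billion, a few hundred times the headline number, which is why the $6 million figure is better read as one line item than as the cost of the model.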

As a subsidiary of the hedge fund High-Flyer, DeepSeek has a structure that allows for agility and rapid innovation. Owning its data centers gives it full control over optimization. It also invests heavily in talent, with some researchers earning over $1.3 million annually.

While DeepSeek's "budget-friendly" narrative is arguably overstated, its success highlights the potential of well-funded independent AI companies. Total investment exceeding $500 million, combined with technical breakthroughs and a strong team, is the true driver of that success. Even so, the contrast with competitors remains stark: ChatGPT4 cost $100 million to train, versus $5 million for DeepSeek's R1. Ultimately, DeepSeek's story shows that significant investment is crucial, but efficient resource management and innovation can still yield competitive results.